How swift is Swift compared to C++?

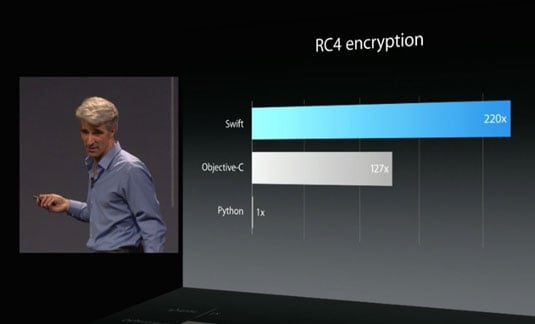

Merriam-Webster defines swift as “moving or capable of moving at great speed”. Apple made a similar promise at the WWDC 2014 keynote, where Swift was first announced. One of the slides compared RC4 encryption performance and showed Swift running 220x faster than Python and almost 2x faster than Objective-C.

What about C++, the classic performance behemoth? Which of these two languages takes the cake when it comes to finding the longest Collatz sequence for starting numbers under 1 million? Let’s find out!
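For readers new to the problem: the Collatz rule halves an even number and maps an odd number n to 3n + 1, and the conjecture is that every positive starting value eventually reaches 1. The benchmark asks which starting value takes the most steps to get there. As a minimal sketch (the function name `collatzSteps` is made up for illustration and is not part of the benchmarked code), counting the steps for a single number could look like this:

```cpp
#include <cstdint>

// Counts how many Collatz steps it takes for `start` to reach 1.
// Assumes start >= 1. A 64-bit value is used because intermediate
// terms can grow far beyond the starting number.
int collatzSteps(std::int64_t start)
{
    std::int64_t value = start;
    int steps = 0;
    while (value != 1)
    {
        // Halve even values, map odd values to 3n + 1
        value = (value % 2 == 0) ? value / 2 : value * 3 + 1;
        ++steps;
    }
    return steps;
}
```

For example, `collatzSteps(6)` returns 8, following 6 → 3 → 10 → 5 → 16 → 8 → 4 → 2 → 1. The full solutions add a lookup table so that each chain can stop as soon as it reaches a number whose step count is already known.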

I made an effort to keep the solutions as similar as possible to have a fair comparison. First I will show you the full source code of both solutions and finally the results.

C++ Solution

    #include <cstdio>
    #include <iostream>
    #include <sys/time.h>

    int main(int argc, const char * argv[])
    {
        // Take starting time for performance measurement
        struct timeval start, end;
        gettimeofday(&start, NULL);

        // Define the range for testing
        const int FROM = 2;
        const int UNTIL = 999999;

        // Save sequence lengths in array for performance gains
        const int ARRAY_SIZE = 1000000;
        int sequenceArray[ARRAY_SIZE];

        // Initialize all to -1
        for (int i = 0; i < ARRAY_SIZE; ++i)
        {
            sequenceArray[i] = -1;
        }
        sequenceArray[1] = 0;

        // Loop each number in the range
        for (int number = FROM; number < UNTIL; ++number)
        {
            long value = number;
            int sequence = 0;

            // If previously calculated, no need to recalculate
            bool notFound = sequenceArray[value] < 0;
            while (notFound)
            {
                // Actual calculation of the Collatz conjecture
                value = value % 2 == 0 ? value / 2 : value * 3 + 1;
                ++sequence;

                // Need to set true for those outside range to avoid runtime error
                notFound = (value < (ARRAY_SIZE - 1)) ? (sequenceArray[value] < 0) : true;
            }
            sequence += sequenceArray[value];
            sequenceArray[number] = sequence;
        }

        // Now find out which one had the longest sequence
        int valueWithLongestSequence = 0;
        int longestSequence = 0;
        for (int number = FROM; number < UNTIL; ++number)
        {
            if (sequenceArray[number] > longestSequence)
            {
                longestSequence = sequenceArray[number];
                valueWithLongestSequence = number;
            }
        }

        // Performance comparison code
        gettimeofday(&end, NULL);

        // Include the seconds field so the result stays correct when the
        // run crosses a second boundary
        long elapsedMs = ((end.tv_sec - start.tv_sec) * 1000000L + (end.tv_usec - start.tv_usec)) / 1000;
        printf("time = %ld ms\n", elapsedMs);

        // Output result
        std::cout << "DONE! Value " << valueWithLongestSequence << " has longest sequence " << longestSequence << ".\n";
        return 0;
    }

Swift Solution

See more information about the Swift solution here:

http://swift.svbtle.com/calculating-the-collatz-conjecture-with-swift

    // Import Foundation for taking execution time with NSDate
    import Foundation

    // Take starting time for performance measurement
    var start = NSDate()

    // Define the range for testing
    let FROM = 2
    let UNTIL = 999_999

    // Save sequence lengths in array for performance gains
    // (declared with var because its elements are written below)
    let ARRAY_SIZE = 1_000_000
    var stepsArray = Array(count: ARRAY_SIZE, repeatedValue: -1)
    stepsArray[1] = 0

    // Loop each number in the range
    for number in FROM...UNTIL
    {
        var value = number
        var steps = 0

        // If previously calculated, no need to recalculate
        var notFound = stepsArray[value] < 0
        while (notFound)
        {
            // Actual calculation of the Collatz conjecture
            value = value % 2 == 0 ? value / 2 : value * 3 + 1
            ++steps

            // Need to set true for those outside range to avoid runtime error
            notFound = value < (ARRAY_SIZE - 1) ? stepsArray[value] < 0 : true
        }
        steps += stepsArray[value]
        stepsArray[number] = steps
    }

    // Now find out which one had the longest sequence
    var longestSequence : (Int, Int) = (0, 0)
    for number in FROM...UNTIL
    {
        if stepsArray[number] > longestSequence.1
        {
            // Set longest sequence tuple to hold the number and sequence length
            longestSequence = (number, stepsArray[number])
        }
    }

    // Performance comparison code
    var end = NSDate()
    var timeTaken = end.timeIntervalSinceDate(start) * 1000
    println("Time taken: \(timeTaken) ms.")

    // Output result
    println("DONE! Value \(longestSequence.0) has longest sequence \(longestSequence.1)")

Performance evaluation

As you can see, both solutions use the same algorithm, and the code even looks very similar. The performance was measured on an Early 2011 MacBook Pro with a 2.2 GHz i7 processor. The operating system was the OS X 10.10 Yosemite beta, and both solutions were compiled with the Xcode 6.0 beta. The compiler for C++ was Apple LLVM 6.0.

I first ran both applications directly from the Xcode development view four times to gain confidence in the results.
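Timing a run that lasts only tens of milliseconds is sensitive to how the clock is read. The listings above use `gettimeofday` and `NSDate`, both of which read the wall clock. For anyone repeating the C++ measurement, a monotonic timer is a possible alternative. The following is a minimal sketch, assuming C++11; the helper name `elapsedMilliseconds` is made up for illustration and was not used to produce the numbers in this post:

```cpp
#include <chrono>

// Runs `work` once and returns the elapsed time in whole milliseconds.
// steady_clock is monotonic, so the result is not affected by
// adjustments to the system clock during the run.
template <typename Work>
long long elapsedMilliseconds(Work work)
{
    const auto start = std::chrono::steady_clock::now();
    work();
    const auto end = std::chrono::steady_clock::now();
    return std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count();
}
```

The work to be timed is passed in as a callable, for example a lambda that wraps the two loops of the C++ solution. Repeating the call several times, as in the four runs below, helps to see how much the result varies.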

| | Swift | C++ |
|---|---|---|
| Run 1 | 15165 ms | 157 ms |
| Run 2 | 14942 ms | 156 ms |
| Run 3 | 14863 ms | 164 ms |
| Run 4 | 14679 ms | 151 ms |

The performance difference was in fact quite stunning. On average C++ was close to 100x faster (about 95x): Swift ran for approximately 15 seconds, while C++ took only a little over 150 milliseconds.

It’s of course good to remember here that C++ has been actively developed for around 31 years and as such has evolved into quite a performance beast, whereas Swift was only released 2 weeks ago.

But then I found some comments here indicating that the performance gap can be bridged by changing the compiler options of Swift: https://stackoverflow.com/questions/24101718/swift-performance-sorting-arrays

Let’s investigate!

I next compiled and ran the Swift application with each of the available compiler optimization settings. For C++ there seemed to be no substantial difference between the optimization levels (-O, -O2, -O3, -Ofast), as long as optimization was enabled.

| Language | Swift | Swift | Swift | C++ | C++ |
|---|---|---|---|---|---|
| Compiler Option | None | Fastest | Fastest, Unchecked | None | Fastest |
| Run 1 | 16680 ms | 983 ms | 38 ms | 164 ms | 35 ms |
| Run 2 | 17057 ms | 1030 ms | 54 ms | 180 ms | 27 ms |
| Run 3 | 16971 ms | 1004 ms | 35 ms | 172 ms | 18 ms |
| Run 4 | 17035 ms | 1036 ms | 41 ms | 206 ms | 19 ms |

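The performance crown seems to stand firmly in the C++ corner as things are today. With no compiler optimization, C++ beats Swift by a whopping factor of nearly 100. With optimizations enabled, the result depends on Swift's safety checks: with Fastest, Swift is still around 40x slower than optimized C++, while with Fastest, Unchecked, C++ takes a bit more than half the time of Swift in this particular test.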
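The gap between the Fastest and the Fastest, Unchecked columns is most likely the cost of Swift's runtime safety checks, such as array bounds checking, which the unchecked setting removes. The C++ solution never pays that cost, because raw array indexing is not checked. As a rough analogy in C++ (a sketch with the hypothetical helper names `checkedLookup` and `uncheckedLookup`, not code from the benchmark), `std::vector::at` validates the index while `operator[]` does not:

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Checked lookup: std::vector::at validates the index on every call and
// throws std::out_of_range when it is invalid.
// Returns -1 as a "not available" marker in that case.
int checkedLookup(const std::vector<int>& cache, std::size_t index)
{
    try
    {
        return cache.at(index);
    }
    catch (const std::out_of_range&)
    {
        return -1;
    }
}

// Unchecked lookup: operator[] is not required to validate the index,
// so the caller must guarantee index < cache.size().
int uncheckedLookup(const std::vector<int>& cache, std::size_t index)
{
    return cache[index];
}
```

The analogy is not exact: a failed bounds check in Swift stops the program instead of throwing an exception. The trade-off is the same, though. The checked version does a little extra work on every access, and in a loop that does almost nothing except array lookups, that extra work can add up.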

All this said, there is more to a language than performance. So far Swift has certainly been a pleasure to learn, and there are many small things it does smarter than C++.

EDIT: It was pointed out to me that the C++ version was using 32-bit integers, while Swift was using 64-bit ones. After changing the C++ implementation to match, I re-ran the test and found that the times increased to the range of 31-46 ms with -O3 and 29-33 ms with -Ofast. The margin in this test is therefore small, and it’s possible that the remaining small differences in the code account for the rest of the gap. Fundamentally, for this test, the two solutions seem more or less equally fast.
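Integer width matters for correctness here as well as for speed. Swift's `Int` is 64 bits wide on this machine, and the C++ listing keeps the running value in a `long` for a reason: some Collatz chains that start below one million climb above the largest signed 32-bit value. The following sketch illustrates this; the helper names `collatzPeak` and `fitsInInt32` are made up for the example, and it does not reproduce the exact change made to the C++ code for the re-run:

```cpp
#include <cstdint>
#include <limits>

// Returns the largest value reached in the Collatz chain starting at `start`.
// Assumes start >= 1.
std::int64_t collatzPeak(std::int64_t start)
{
    std::int64_t value = start;
    std::int64_t peak = start;
    while (value != 1)
    {
        value = (value % 2 == 0) ? value / 2 : value * 3 + 1;
        if (value > peak)
        {
            peak = value;
        }
    }
    return peak;
}

// True when every term of the chain fits in a signed 32-bit integer.
bool fitsInInt32(std::int64_t start)
{
    return collatzPeak(start) <= std::numeric_limits<std::int32_t>::max();
}
```

For instance, `fitsInInt32(27)` is true, while `fitsInInt32(113383)` is false, so a 32-bit running value would overflow well inside the tested range. The stored step counts are small and fit comfortably in 32 bits, but wider array elements mean more memory traffic, which is one plausible reason the 64-bit C++ version was slower.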